Table of Contents

With the continuous advancement in technology, maintaining an efficient website is crucial for enhancing both user experience & search engine optimization (SEO). One of the prominent tools that can help you to seamless interact with search engines is Robots.txt file.

In this blog, we will guide you on how to block a spam domain in Robot.txt & why it is important. Along with this, we will provide a step-by-step guide that will navigate you through the process hassle-freely.

What is Robots.txt?

The Robots.txt file is a simple text file that is placed in the root directory of your website that instructs web crawlers, which are also known as robots or spiders, on how to interact with your site. It instructs these crawlers that which section/part of your site should not be indexed or accessed. This is particularly essential for managing site’s visibility and ensuring that sensitive or unnecessary data is not indexed by search engine.

The Robots.txt file use a specific syntax to communicate with the crawlers. For instance, you can use directives like “user – agent” to specify on which crawler the rule is being applied and “Disallow” to indicate which page, or part should not be indexed.

Why Block Spam Domains with Robots.txt?

Blocking spam domain is essential due to many reasons, like:

Protecting Your Site’s Reputation:

Spam domains can have negative impact on your site’s credibility. If search engines associate your site with spammy domains, it can harm your domain spam score and overall, SEO performance.

Improving User Experience:

Spam domains can lead to unwanted traffic and potential harmful interactions. By blocking these domains, you can enhance the user experience on your site.

Optimizing Crawl Budget:

Search engines allocate a specific budget for each site. By blocking spam domains, you ensure that crawlers focus on their valuable content rather than irrelevant and harmful pages.

How Mobile Apps Foster Loyalty?

Mobile apps can help build brand loyalty and enhance customer engagement by:

Preventing Duplicate Content Issues:

Spam domains can create duplicate content that can confuse search engines and dilute your site’s authority.

How to Block a Spam Domain in Robots.txt?

To block a spam using Robots.txt, you can add Disallow rule to all its User Agents, but this is only applicable when bots respect the file (for instance: User-agent: SpamBot Disallow: /). However, Robots.txt is not a security measure; as malicious bots always ignore it. For stronger protection, use server – level blocking (for example: .htaccess or nginx.conf to deny IPs, Cloudflare or firewall restrictions).

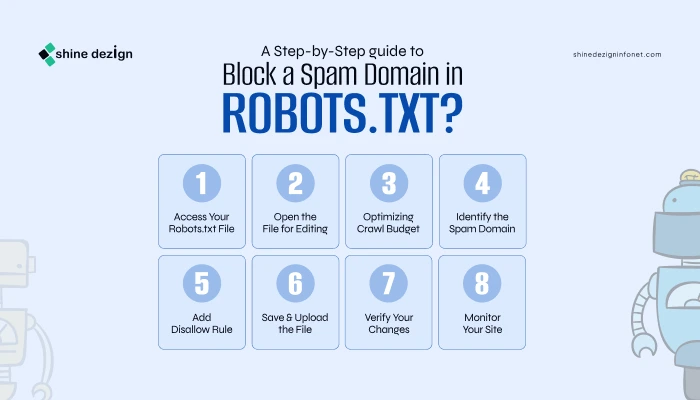

A Step-by-Step guide to Block a Spam Domain in Robots.txt

1. Access Your Robots.txt File:

1. Access Your Robots.txt File:

The Robots.txt file is generally located in the root directory of your website (for example, www.example.com/robots.txt). You can access it via your website’s file manager or FTP client.

2. Open the File for Editing:

Use a text editor to open the Robots.txt file. If you don’t have any, you can create a new text file and name it “robots.txt”.

3. Identify the Spam Domain:

Before you block a spam domain, you need to identify it. You can use tools to check domain spam score or check domain name for spam to determine if the domain is harmful or not.

4. Add Disallow Rule:

To block a specific domain, you can add the following lines to your robots.txt file:

User-agent: * Disallow: /path-to-spam-domain/

Replace “/path-to-spam-domain/” with the actual path you want to block. If you want to block all the pages from a specific domain, you can use:

User-agent: * Disallow: /

5. Save & Upload the File:

After making the necessary changes, save the file and upload it to the root directory of your Ecommerce website.

6. Verify Your Changes:

Use a Robots.txt checker tool to verify that your changes have been implemented correctly. This will also help you to confirm that the spam domain has also been blocked.

7. Monitor Your Site:

Regularly monitor your site for any new spam domains. You can use tools to check robots.txt and keep your file updated.

What are the Limitations of Robots.txt?

As the Robots.txt file is a powerful tool, but it still has limitations:

Not a Security Measure:

The Robots.txt is not a security measure. It just provides instructions to the compliant crawlers. Malicious bots may ignore these directories.

No Guarantee of Blocking:

Blocking a domain in Robots.txt does not guarantee that it will not be indexed. Some search engines may still index the page if it is linked with other sites.

Limited Control:

You cannot control specific URLs and parameters using Robots.txt. For more granular control you may need to use other methods such as meta tags, server – side configuration, and many other.

What are the Alternative Methods to Block a Spam?

There are several other methods to block a spam domain, these are:

Using Meta Tags

You can use meta tags to prevent the specific page from being indexed by the search engines. By adding the following meta tags to the “” section of your HTML, you can instruct search engines not to index any particular page

<meta name="robots" content="noindex,nofollow">

This method is particularly used for single pages that you want to keep out of search engine result without effecting the ranking of entire website.

Server – Side Blocking:

If you have access to your server configuration, you can block spam domains at the server level. For example, if you are using Apache, you can add rules to you “.htaccess” file to deny specific IP address or user agents associated with spam domains. Here’s an example:

RewriteEngine On

RewriteCond %{HTTP_REFERER} spamdomain\.com [NC]

RewriteRule ^.* - [F]

This rule will block any request coming from spamdomain.com.

Firewall Rules:

Implementing firewall rules can help you to block unwanted traffic from spam domains. Many web hosting services offer inbuilt firewall options that allow you to specify which IP address or domains to block. This is a more robust solution that can prevent spams before it even reaches your website.

Content Delivery Network (CDN) Security Features:

If you are using a Content Delivery Network (CDN), many of them come with security features that can help block spam traffic. For instance, services like Cloudflare offer options to block specific user agents, IP address or domains, & others. This can be effective way to block spam without requiring making any change in the robots.txt file.

Regular Monitoring and Maintenance:

Regularly monitoring your website for spam domain is necessary. Use tools to check domain spam score and keep track on your traffic sources. If you find any suspicious activity, then take immediate action to block these spam domains.

Summing Up

Blocking a spam domain is essential step in maintaining a healthy website. By understanding how to block a spam domain in robots.txt, you can protect your website’s reputation, improve overall user experience & optimize your crawl budget. As Robots.txt is a valuable tool, simultaneously it is necessary to understand its limitations and look for alternative methods for a more informed approach for blocking spam.